Secant method: Difference between revisions

Polluxonis (no edit summary)


Revision as of 00:33, 26 December 2010

In numerical analysis, the secant method is a root-finding algorithm that uses a succession of roots of secant lines to better approximate a root of a function f. The secant method can be thought of as a finite difference approximation of Newton's method. However, the method was developed independently of Newton's method, and predated the latter by over 3000 years. [1]

The method

The secant method is defined by the recurrence relation

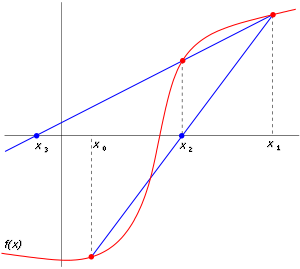

As can be seen from the recurrence relation, the secant method requires two initial values, x0 and x1, which should ideally be chosen to lie close to the root.

Derivation of the method

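Starting with initial values x0 and x1, we construct a line through the points (x0, f(x0)) and (x1, f(x1)), as demonstrated in the picture on the right. In point-slope form, this line has the equation

$$y = \frac{f(x_1) - f(x_0)}{x_1 - x_0}(x - x_1) + f(x_1).$$

We find the root of this line (the value of x such that y = 0) by solving the following equation for x:

$$0 = \frac{f(x_1) - f(x_0)}{x_1 - x_0}(x - x_1) + f(x_1).$$

The solution is

$$x = x_1 - f(x_1) \frac{x_1 - x_0}{f(x_1) - f(x_0)}.$$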

We then use this value of x as x2 and repeat the process using x1 and x2 instead of x0 and x1. We continue this process, solving for x3, x4, etc., until we reach a sufficiently high level of precision (a sufficiently small difference between xn and xn-1).
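The derivation can be checked numerically. In the following C sketch, the hypothetical helper `secant_line` evaluates the point-slope line through (x0, y0) and (x1, y1), and the hypothetical helper `secant_line_root` returns that line's x-intercept using the solution formula. For example, the line through (0, 1) and (1, −1) crosses the axis at x = 0.5.

```c
#include <math.h>

/* Point-slope form of the line through (x0, y0) and (x1, y1),
 * evaluated at x (illustrative helper). */
double secant_line(double x0, double y0, double x1, double y1, double x)
{
    double slope = (y1 - y0) / (x1 - x0);
    return slope * (x - x1) + y1;
}

/* x-intercept of that line: x = x1 - y1 (x1 - x0) / (y1 - y0).
 * Requires y1 != y0, because a horizontal line has no root. */
double secant_line_root(double x0, double y0, double x1, double y1)
{
    return x1 - y1 * (x1 - x0) / (y1 - y0);
}
```

Setting x2 = secant_line_root(x0, f(x0), x1, f(x1)) and then replacing (x0, x1) with (x1, x2) reproduces the iteration described above.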


Convergence

The iterates xn of the secant method converge to a root of f if the initial values x0 and x1 are sufficiently close to the root. The order of convergence is α, where

$$\alpha = \frac{1 + \sqrt{5}}{2} \approx 1.618$$

is the golden ratio. In particular, the convergence is superlinear.

This result only holds under some technical conditions, namely that f be twice continuously differentiable and the root in question be simple (i.e., with multiplicity 1).

If the initial values are not close to the root, then there is no guarantee that the secant method converges.

Comparison with other root-finding methods

Unlike the bisection method, the secant method does not require that the root remain bracketed, and hence it does not always converge. The false position method uses the same formula as the secant method. However, it does not apply the formula to xn−1 and xn, as the secant method does. Instead, it applies the formula to xn and the last iterate xk such that f(xk) and f(xn) have opposite signs. As a result, the false position method always converges, provided that the two initial points bracket a root of a continuous function f.

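The recurrence formula of the secant method can be derived from the formula for Newton's method

$$x_n = x_{n-1} - \frac{f(x_{n-1})}{f'(x_{n-1})}$$

by using the finite difference approximation

$$f'(x_{n-1}) \approx \frac{f(x_{n-1}) - f(x_{n-2})}{x_{n-1} - x_{n-2}}.$$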

Comparing the two methods, Newton's method converges faster (order 2 against α ≈ 1.6). However, Newton's method requires the evaluation of both f and its derivative at every step, while the secant method only requires the evaluation of f. Therefore, the secant method may well be faster in practice. Suppose, for instance, that evaluating f takes as much time as evaluating its derivative, and that all other costs are negligible. Then two steps of the secant method, which decrease the logarithm of the error by a factor α² ≈ 2.6, cost the same as one step of Newton's method, which decreases it by a factor 2. In that case the secant method is faster. If, however, f and its derivative can be evaluated in parallel, Newton's method proves its worth: it is faster in time, although it still performs more function evaluations in total.

Generalizations

Broyden's method is a generalization of the secant method to more than one dimension.

Example code

The following C code was written for clarity rather than efficiency. It solves the same problem as the example code for Newton's method and the false position method: finding the positive number x where cos(x) = x³. This problem is transformed into a root-finding problem of the form f(x) = cos(x) − x³ = 0.

In the code below, the secant method continues until one of two conditions occurs:

- $|x_n - x_{n-1}| < \varepsilon$
- $n > m$

for some given m and ε.

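```c
#include <stdio.h>
#include <math.h>

/* The function whose root is sought: f(x) = cos(x) - x^3. */
double f(double x)
{
    return cos(x) - x*x*x;
}

/* Secant method starting from xn_1 = x_0 and xn = x_1.
 * e: tolerance on the step size; m: maximum number of iterations. */
double SecantMethod(double xn_1, double xn, double e, int m)
{
    int n;
    double d;
    for (n = 1; n <= m; n++)
    {
        /* Stop if the secant line is horizontal (avoids division by zero). */
        if (f(xn) == f(xn_1))
            return xn;
        /* d = x_n - x_{n+1}, the step given by the recurrence relation */
        d = (xn - xn_1) / (f(xn) - f(xn_1)) * f(xn);
        if (fabs(d) < e)
            return xn;
        xn_1 = xn;
        xn = xn - d;
    }
    return xn;
}

int main(void)
{
    printf("%0.15f\n", SecantMethod(0, 1, 5E-11, 100));
    return 0;
}
```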

Running this code gives a final answer of approximately 0.865474033101614. The initial, intermediate, and final approximations are listed below; correct digits are underlined.

The following graph shows the function f in red and the last secant line in bold blue. In the graph, the x-intercept of the secant line seems to be a good approximation of the root of f.

Matlab code:

```matlab
function Xs = SecantRoot(Fun, Xa, Xb, Err, imax)
% SecantRoot finds the root of Fun = 0 using the secant method.
% Input variables:
%   Fun     Name (string) of a function file, or a function handle,
%           that calculates Fun for a given x.
%   Xa, Xb  Two points in the neighborhood of the root (on either side,
%           or the same side of the root).
%   Err     Maximum relative error (tolerance on the relative change
%           between successive iterates).
%   imax    Maximum number of iterations.
% Output variable:
%   Xs      Solution ('No answer' if not obtained within imax iterations).
Xs = 'No answer';
for i = 1:imax
    FunXb = feval(Fun, Xb);
    % Root of the secant line through (Xa, Fun(Xa)) and (Xb, Fun(Xb))
    Xi = Xb - FunXb*(Xa - Xb)/(feval(Fun, Xa) - FunXb);
    % Stop when the relative change between iterates is below Err
    if abs((Xi - Xb)/Xb) < Err
        Xs = Xi;
        return
    end
    Xa = Xb;
    Xb = Xi;
end
fprintf('Solution was not obtained in %i iterations.\n', imax)
end
```

References

- Kaw, Autar; Kalu, Egwu (2008), Numerical Methods with Applications (1st ed.), http://www.autarkaw.com

- [1] http://www.allacademic.com/meta/p_mla_apa_research_citation/2/0/0/0/4/p200044_index.html

External links

- Animations for the secant method

- Secant Method Notes, PPT, Mathcad, Maple, Mathematica, Matlab at Holistic Numerical Methods Institute

- Weisstein, Eric W. "Secant Method". MathWorld.

- Module for Secant Method by John H. Mathews
