Eliezer Yudkowsky: Difference between revisions

as I said, nothing indiscriminate about one of his most popular & longest works.

Please address my many concerns before reverting again, thank you.

Line 33:

He is a co-founder and research fellow of the [[Singularity Institute for Artificial Intelligence]] (SIAI).<ref name = "SiNnote">{{cite book|author=Kurzweil, Ray|title=The Singularity Is Near|publisher=Viking Penguin|location=New York, US|year=2005|isbn=0-670-03384-7|page=599|authorlink=Ray_Kurzweil}}</ref> Yudkowsky is the author of the SIAI publications "[http://www.singinst.org/upload/CFAI/index.html Creating Friendly AI]" (2001), "[http://www.singinst.org/upload/LOGI//LOGI.pdf Levels of Organization in General Intelligence]" (2002), "[http://singinst.org/upload/CEV.html Coherent Extrapolated Volition]" (2004), and "[http://singinst.org/upload/TDT-v01o.pdf Timeless Decision Theory]" (2010).<ref name = "afuture">{{cite web|url=http://www.acceleratingfuture.com/people/Eliezer-Yudkowsky/|title=Eliezer Yudkowsky Profile|publisher=Accelerating Future}}</ref>

Yudkowsky's research focuses on Artificial Intelligence designs which enable self-understanding, self-modification, and recursive self-improvement ([[seed AI]]); and also on artificial-intelligence architectures for stably benevolent motivational structures ([[Friendly AI]], and [[Coherent Extrapolated Volition]] in particular).<ref name = "SiN1">{{cite book|author=Kurzweil, Ray|title=The Singularity Is Near|publisher=Viking Penguin|location=New York, US|year=2005|isbn=0-670-03384-7|page=420}}</ref> Apart from his research work, Yudkowsky has written explanations of various philosophical topics in non-academic language, particularly on rationality, such as [http://yudkowsky.net/rational/bayes "An Intuitive Explanation of Bayes' Theorem"].

He contributed two chapters to [[Oxford University|Oxford]] philosopher [[Nick Bostrom]]'s and Milan Ćirković's edited volume ''Global Catastrophic Risks''.<ref name = "bostrom">{{cite book|editor1-last=Bostrom|editor1-first=Nick|editor1-link=Nick_Bostrom|editor2-last=Ćirković|editor2-first=Milan M.|title=Global Catastrophic Risks|publisher=Oxford University Press|location=Oxford, UK|year=2008|isbn=978-0-19-857050-9|pages=91–119, 308–345}}</ref>

Revision as of 16:16, 19 June 2011

This article has an unclear citation style. (April 2011)

This article needs to be divided into sections. (April 2011)

Eliezer Yudkowsky | |

|---|---|

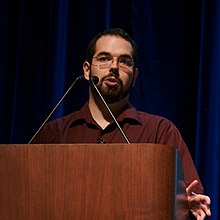

Eliezer Yudkowsky at the 2006 Stanford Singularity Summit. | |

| Born | September 11, 1979 |

| Nationality | American |

| Citizenship | American |

| Known for | Seed AI, Friendly AI |

| Scientific career | |

| Fields | Artificial intelligence |

| Institutions | Singularity Institute for Artificial Intelligence |

Eliezer Shlomo Yudkowsky (born September 11, 1979) is an American artificial intelligence researcher concerned with the Singularity and an advocate of Friendly artificial intelligence.[1] He lives in Redwood City, California.[2]

Yudkowsky did not attend high school and is an autodidact with no formal education in artificial intelligence.[3] He does not operate within the academic system and has not authored any peer-reviewed papers.

He is a co-founder and research fellow of the Singularity Institute for Artificial Intelligence (SIAI).[4] Yudkowsky is the author of the SIAI publications "Creating Friendly AI" (2001), "Levels of Organization in General Intelligence" (2002), "Coherent Extrapolated Volition" (2004), and "Timeless Decision Theory" (2010).[5]

Yudkowsky's research focuses on artificial intelligence designs that enable self-understanding, self-modification, and recursive self-improvement (seed AI), and on artificial intelligence architectures for stably benevolent motivational structures (Friendly AI, and Coherent Extrapolated Volition in particular).[6] Apart from his research work, Yudkowsky has written explanations of various philosophical topics in non-academic language, particularly on rationality, such as "An Intuitive Explanation of Bayes' Theorem".

He contributed two chapters to Global Catastrophic Risks, a volume edited by Oxford philosopher Nick Bostrom and Milan Ćirković.[7]

Along with Robin Hanson, Yudkowsky was one of the principal contributors to the blog Overcoming Bias, sponsored by the Future of Humanity Institute of Oxford University. In early 2009, he helped found Less Wrong, a "community blog devoted to refining the art of human rationality".[8]

References

1. "Singularity Institute for Artificial Intelligence: Team". Singularity Institute for Artificial Intelligence. Retrieved 2009-07-16.
2. Eliezer Yudkowsky: About
3. "GDay World #238: Eliezer Yudkowsky". The Podcast Network. Retrieved 2009-07-26.
4. Kurzweil, Ray (2005). The Singularity Is Near. New York, US: Viking Penguin. p. 599. ISBN 0-670-03384-7.
5. "Eliezer Yudkowsky Profile". Accelerating Future.
6. Kurzweil, Ray (2005). The Singularity Is Near. New York, US: Viking Penguin. p. 420. ISBN 0-670-03384-7.
7. Bostrom, Nick; Ćirković, Milan M., eds. (2008). Global Catastrophic Risks. Oxford, UK: Oxford University Press. pp. 91–119, 308–345. ISBN 978-0-19-857050-9.
8. "Overcoming Bias: About". Overcoming Bias. Retrieved 2009-07-26.

Further reading

- Our Molecular Future: How Nanotechnology, Robotics, Genetics and Artificial Intelligence Will Transform Our World by Douglas Mulhall, 2002, p. 321.

- The Spike: How Our Lives Are Being Transformed By Rapidly Advancing Technologies by Damien Broderick, 2001, pp. 236, 265–272, 289, 321, 324, 326, 337–339, 345, 353, 370.

External links

- Personal web site

- Less Wrong - "A community blog devoted to refining the art of human rationality" founded by Yudkowsky.

- Biography page at KurzweilAI.net

- Biography page at the Singularity Institute

- Downloadable papers and bibliography

- Predicting The Future :: Eliezer Yudkowsky, NYTA Keynote Address - Feb 2003

- Harry Potter and the Methods of Rationality at Fanfiction.net